5.1 In Vivo Approach

In vivo approaches for estimating the oral bioavailability of contaminants in soil involve ingestion of soil by living subjects under controlled conditions, followed by assessment of the systemically absorbed fraction by any of a variety of means. The subjects can be humans but are typically laboratory animals. The literature includes many examples of in vivo bioavailability studies using different species, experimental designs, and measurement endpoints.

5.1.1 Human Studies

The obvious advantage of conducting a bioavailability study in humans is that the uncertainty involved in extrapolating results from a surrogate species is eliminated. Classical bioavailability measurement endpoints suitable for most chemicals (measurement of concentrations of parent chemical or metabolites in blood and urine) can be used. The bioavailability estimates obtained for individual subjects then indicate the extent to which the estimates might vary in a population. While human bioavailability data for environmental contaminants are highly desirable, there are significant obstacles to conducting a study in humans, the greatest being the ethical issue of intentionally exposing a human subject to a potential toxicant.

Soil bioavailability studies in humans must present little risk to the subjects. The doses of contaminant administered in soil must be low and, ideally, close to those encountered in common, unintentional exposures to the contaminant. This constraint makes it difficult to measure bioavailability from soil, a difficulty compounded by analytical detection limits and the need to discriminate the administered dose from background exposure. One study of arsenic bioavailability from soil attempted to reduce background arsenic exposure by having human subjects consume a low-arsenic diet before receiving the arsenic soil dose (Stanek et al. 2010). The result was only partially successful: although a bioavailability estimate for a soil sample was obtained, background interference remained a substantial problem. Another study solved this problem with a clever design that took advantage of the multiple isotopic forms of lead that exist in nature (Maddaloni et al. 1998). Using the isotopic profile of lead in the soil dose, researchers could distinguish this lead from background intake by isotopic dilution and then measure the bioavailability of the soil lead in individual fed and fasted subjects. Unfortunately, few other substances have the natural isotopic variation that makes this approach feasible.
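The isotope-dilution idea can be sketched as a simple two-end-member mixing calculation. This is a minimal illustration, not the procedure of Maddaloni et al. (1998): it assumes a single lead isotope ratio whose values in background lead and in soil lead are known and distinct, and it treats the blood ratio as a linear mix of the two, which ignores differences in the abundance of the denominator isotope between sources. The function name `soil_fraction` and all numbers are hypothetical.

```python
def soil_fraction(r_blood: float, r_background: float, r_soil: float) -> float:
    """Fraction of blood lead attributable to the soil dose, from a two-end-member
    linear mixing model of one isotope ratio (a simplifying assumption)."""
    if r_soil == r_background:
        raise ValueError("soil and background isotope ratios must differ")
    return (r_blood - r_background) / (r_soil - r_background)


# Hypothetical isotope ratios and blood lead concentration (illustrative only).
r_background = 1.20   # ratio in the subject's pre-dose blood (background lead)
r_soil = 1.30         # ratio in the administered soil lead
r_blood = 1.22        # ratio measured in blood after the soil dose
blood_pb_total = 2.0  # total blood lead, ug/dL

f = soil_fraction(r_blood, r_background, r_soil)
soil_derived_pb = f * blood_pb_total
print(f"fraction from soil = {f:.2f}; soil-derived blood lead = {soil_derived_pb:.2f} ug/dL")
```

The larger the contrast between the soil and background ratios, the smaller the soil-derived increment that can be resolved against analytical error.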

In theory, it may be possible to distinguish a low administered dose of a chemical in soil from background in humans by using stable isotope-labeled material. This approach, which differs from the use of natural lead isotopes described above, would involve spiking a sample of soil from a contaminated site with a stable isotope-labeled form of the contaminant and determining the RBA of the labeled material. The critical assumption in this approach is that any interactions between the contaminant and the site soil that can influence bioavailability, including those that occur during weathering, can be effectively duplicated with the spike, so that bioavailability results representative of site soil contamination can be obtained. Even if the soil dose can be effectively distinguished from background with the stable isotope label, it may be difficult to accurately measure the absorbed dose if the dose that can be safely administered to a human subject is low. No examples using this approach to assess bioavailability from soil in human subjects are currently available in the literature.

Another consideration is the cost and time required to conduct the study. Approval of a human bioavailability study by an Institutional Review Board (IRB; typically a unit of a university system that ensures the legal compliance of the work and the safety and ethical treatment of human research subjects) may require monitoring of the subjects in a clinical setting, with its attendant costs. The time required to recruit volunteers must also be considered with respect to the overall project schedule.

Because of these various obstacles, studies determining the RBA of contaminants in humans are extremely limited. In fact, the only studies available are the ones cited above.

5.1.2 Animal Studies

Although interspecies extrapolation always entails uncertainty, there are advantages to using animal surrogates to assess the bioavailability of soil contaminants in vivo. Practically speaking, there is much greater latitude in the chemicals that can be evaluated and the doses that can be administered, provided that the doses do not produce overt toxicity in the subjects. Overt toxicity can confound measurement of bioavailability and should be avoided if possible. Animal subjects also allow more invasive endpoints to be used if needed (such as measurement of tissue concentrations), which are generally not feasible in humans.

5.1.2.1 Experimental Design

There are several aspects of experimental design to consider, including:

- appropriate soil particle size

- relevant comparison group

- linearity of pharmacokinetics

- repeated versus single dose

- measurement of parent compound, metabolites, or both

- adequate number of subjects

- relevant concentrations/doses, number of different doses

- ability to demonstrate full range of RBA

- average versus individual subject RBA measurements

- mass balance

Appropriate soil particle size.

Relevant comparison group.

Linearity of pharmacokinetics.

Nonlinearity typically occurs when doses extend into a range where saturable or capacity-limited uptake or metabolic processes operate (Shargel and Yu 1999). A bioavailability study must either demonstrate, or have good reason to assume, that the doses used produce linear pharmacokinetics, or else calculate RBA using appropriate nonlinear models that relate absorbed dose to external dose. For example, in the swine assay for lead, RBA is calculated from the blood lead area under the curve (AUC) using a regression model of the nonlinear relationship between dose and AUC.
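To see why a nonlinear model matters, the sketch below assumes a hypothetical saturating reference dose-response curve, AUC = A * (1 - exp(-k * dose)), with made-up parameters `A` and `k`; it is not the regression model used in the swine assay. RBA is estimated by inverting the reference curve to find the reference dose that produces the AUC observed for soil, then dividing by the soil dose. A naive ratio of AUCs at equal doses overstates RBA when the curve saturates.

```python
import math

# Hypothetical fitted parameters for a saturating reference dose-response curve:
# AUC(dose) = A * (1 - exp(-k * dose)). Values are illustrative only.
A = 100.0   # plateau AUC
k = 0.01    # curvature, per unit dose


def reference_auc(dose: float) -> float:
    return A * (1.0 - math.exp(-k * dose))


def equivalent_reference_dose(auc: float) -> float:
    """Invert the reference curve: reference dose giving the same AUC."""
    if not 0.0 <= auc < A:
        raise ValueError("AUC must lie in [0, A) to invert the curve")
    return -math.log(1.0 - auc / A) / k


def rba_nonlinear(auc_soil: float, dose_soil: float) -> float:
    """RBA = equivalent reference dose / administered soil dose."""
    return equivalent_reference_dose(auc_soil) / dose_soil


dose_soil = 100.0
auc_soil = reference_auc(0.5 * dose_soil)  # simulate a soil with true RBA = 0.5

rba_model = rba_nonlinear(auc_soil, dose_soil)
rba_naive = auc_soil / reference_auc(dose_soil)  # ratio of AUCs at equal dose
print(f"model-based RBA = {rba_model:.3f}, naive AUC ratio = {rba_naive:.3f}")
```

Here the model-based estimate recovers the simulated RBA of 0.5, while the naive ratio comes out near 0.62 because the reference AUC is already flattening at the full dose.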

Repeated versus single dose.

If repeated doses change the rate at which the chemical is eliminated (its metabolic clearance), for example through metabolic enzyme induction, then determining relative bioavailability becomes complex. A change in metabolic clearance changes the relationship between the systemically absorbed dose and bioavailability endpoints such as blood, tissue, and urine concentrations. For example, an increased rate of metabolic clearance due to induction (an adaptive increase in the metabolism of a chemical) after several doses can lower blood concentrations even if bioavailability is unchanged. This situation is analogous to the problem of nonlinear pharmacokinetics, in which the consistent relationship between systemically absorbed dose and the measured endpoint is lost. For comparisons among treatment groups to yield valid estimates of relative bioavailability, the metabolic clearance of the chemical should be the same in each group.
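The confounding effect of induction follows from the standard linear pharmacokinetic relationship AUC = F × Dose / CL, where F is the absorbed fraction and CL is clearance. The sketch below uses hypothetical values to show how doubling clearance in the soil group (for example, after repeated dosing induces metabolism) halves the apparent RBA even though the true RBA is unchanged.

```python
def auc(fraction_absorbed: float, dose: float, clearance: float) -> float:
    """AUC (0 to infinity) under linear pharmacokinetics: F * Dose / CL."""
    return fraction_absorbed * dose / clearance


dose = 1.0
# Comparison group: naive animals, reference absorption, baseline clearance.
auc_ref = auc(fraction_absorbed=0.8, dose=dose, clearance=1.0)
# Soil group absorbs half as much (true RBA = 0.5) ...
true_rba = 0.5
# ... but repeated dosing has doubled clearance through enzyme induction.
auc_soil_induced = auc(fraction_absorbed=0.8 * true_rba, dose=dose, clearance=2.0)

apparent_rba = auc_soil_induced / auc_ref
print(f"true RBA = {true_rba}, apparent RBA = {apparent_rba}")  # apparent RBA = 0.25
```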

Dose-related enzyme induction complicates the comparisons between treatment groups. One way to avoid this problem is to evaluate bioavailability after a single dose. Metabolic clearance should be similar among naïve (previously untreated) animals given a single dose, allowing more straightforward comparisons among treatment groups. This approach addresses the goal of determining the influence of a soil matrix on delivery of a systemically absorbed dose (the RBA) without the confounding influence of downstream events such as changing metabolic clearance.

Measurement of parent compound, metabolites, or both.

Metabolism of the chemical may be different in the animal model than in humans, and consequently the metabolite to be measured to capture most of the absorbed dose may be different from what would be selected if the study were conducted in humans. If differences in metabolism are recognized and taken into consideration, then they do not compromise the animal model, because the focus of bioavailability studies is on relative absorption rather than metabolism.
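When both parent compound and metabolites are measured, one common convention (assumed here, not prescribed by this section) is to express each metabolite as parent equivalents on a molar basis before summing, so that the total reflects the absorbed dose of the parent chemical. The sketch uses benzo(a)pyrene (about 252.3 g/mol) and one hydroxylated metabolite, 3-hydroxybenzo(a)pyrene (about 268.3 g/mol), and assumes one mole of metabolite per mole of parent; the measured masses are hypothetical.

```python
MW_BAP = 252.31      # g/mol, benzo(a)pyrene (C20H12)
MW_3OH_BAP = 268.31  # g/mol, 3-hydroxybenzo(a)pyrene (C20H12O)


def parent_equivalents(mass: float, mw_analyte: float, mw_parent: float) -> float:
    """Convert an analyte mass to the mass of parent it represents (1:1 molar)."""
    return mass * mw_parent / mw_analyte


# Hypothetical masses recovered in a sample, ng, with each analyte's molecular weight.
measured = {"BaP": (2.0, MW_BAP), "3-OH-BaP": (5.0, MW_3OH_BAP)}

total_parent_eq = sum(
    parent_equivalents(mass, mw, MW_BAP) for mass, mw in measured.values()
)
print(f"total as BaP equivalents: {total_parent_eq:.2f} ng")
```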

Adequate number of subjects.

Statistical power considerations can inform the number of animals included in each dose group and the overall study design. For example, a crossover design, in which each animal provides data for both the soil dose and the reference dose, can increase statistical power compared with dosing separate groups of animals with soil and reference materials. This practice assumes adequate depuration (elimination from the body) between trials and the absence of complications such as enzyme induction, which is more likely with organic chemicals. Therefore, crossover studies should demonstrate that depuration is complete and that any enzyme induction (if relevant) has returned to baseline between dosing with the site soil and the reference material.
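The statistical benefit of a crossover design can be illustrated with a small simulation. The sketch assumes, purely for illustration, that each animal has its own multiplicative absorption factor (lognormal, spread `SIGMA_BETWEEN`) plus smaller within-animal noise on each measurement (`SIGMA_WITHIN`); both values are hypothetical, and complete depuration with no induction is assumed, as the text requires. Pairing soil and reference AUCs within the same animal cancels the animal factor, so individual RBA ratios scatter far less than ratios formed across different animals.

```python
import random
import statistics

TRUE_RBA = 0.5
SIGMA_BETWEEN = 0.4   # hypothetical between-animal variability (log scale)
SIGMA_WITHIN = 0.05   # hypothetical within-animal noise (log scale)
N = 2000              # many simulated animals, to make the contrast stable

rng = random.Random(42)


def simulate_auc(animal_factor: float, rba: float) -> float:
    """AUC for one dosing of one animal: true RBA x animal factor x noise."""
    return rba * animal_factor * rng.lognormvariate(0.0, SIGMA_WITHIN)


factors = [rng.lognormvariate(0.0, SIGMA_BETWEEN) for _ in range(2 * N)]

# Crossover: the same animal receives both the soil dose and the reference dose.
paired = [simulate_auc(f, TRUE_RBA) / simulate_auc(f, 1.0) for f in factors[:N]]

# Parallel groups: soil and reference doses go to different animals.
unpaired = [
    simulate_auc(fs, TRUE_RBA) / simulate_auc(fr, 1.0)
    for fs, fr in zip(factors[:N], factors[N:])
]

print(f"paired:   mean {statistics.mean(paired):.3f}, SD {statistics.stdev(paired):.3f}")
print(f"unpaired: mean {statistics.mean(unpaired):.3f}, SD {statistics.stdev(unpaired):.3f}")
```

A real parallel-group study would compare group means rather than cross-animal ratios; the simulation is only meant to show how much between-animal variability the crossover removes.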

Relevant concentrations/doses, number of different doses.

Ability to demonstrate full range of RBA.

Average versus individual subject RBA measurements.

Mass balance.

5.1.2.2 Specific Animal Models

Considerations in selecting an animal model include the following:

- Similarity in gastrointestinal (GI) physiology between the animal surrogate and target human population of interest. Are the processes important for release of the chemical of interest from soil and its biological uptake sufficiently alike between the animal model and humans that similar soil bioavailability is expected?

- Relevance to the critical study on which the toxicity value for the chemical is based. How well do the model and the bioavailability adjustment factor being sought link to the original study underpinning the risk estimates?

- Cost of the animal subjects (an important component of the overall cost of a bioavailability study). This cost is a factor in determining the feasibility of conducting a bioavailability study to support a risk assessment.

- Regulatory acceptance. Regulatory agencies may have strong preferences regarding the most appropriate animal model to be used to derive bioavailability data for a given chemical. As a practical matter, be aware of these preferences and take them into consideration when planning or evaluating a study.

Species commonly used for assessing relative bioavailability of contaminants from soil include the following:

- Primates. The principal advantage of primates is their phylogenetic similarity to humans. The principal disadvantages are cost (see Table 4-1 in section 4.4.1.2 for information about costs), which limits treatment group sizes, and the relative impracticality of using more invasive measurement endpoints such as tissue concentrations. The experimental design used for primates involves developing RBA estimates for individual subjects.

- Swine. The principal advantage of the swine model is the close similarity of swine GI physiology to that of humans. The cost of subjects (see Table 4-1 for information about costs) is lower than for primates but higher than for rodents. The design used for most swine studies provides an estimate of average RBA with confidence intervals, but not of intersubject variability.

- Laboratory rodents. The principal advantage of laboratory rodents is low cost (see Table 4-1 for information about costs), which allows large treatment groups and lower overall study cost. The principal disadvantage is that rodent GI physiology differs in some respects from that of humans, which may be an issue for some chemicals. As with swine studies, the typical experimental design provides an estimate of average RBA with confidence intervals.

- Other species. A variety of other species, including rabbits, hamsters, and goats, have also occasionally been used for soil bioavailability studies. The principal disadvantages of these models are GI physiology that is dissimilar to that of humans and limited use in the field, which may limit their acceptance.

Studies comparing RBA estimates from different animal models for the same soils are limited but suggest that the models do not necessarily yield equivalent results. For example, estimates of the oral bioavailability of dioxins and furans from two soils differed between rats and swine (Budinsky et al. 2008). In rats, the RBA values for the two soils relative to administration in corn oil were 0.37 and 0.60, whereas in swine the corresponding values were 0.20 and 0.25. A comparison of arsenic RBA values for nine soil samples between mouse and swine models yielded a coefficient of determination (r²) of 0.49, with swine producing RBA values that were on average about 50% higher (Bradham et al. 2013). In this study, results for four soil samples from the same site could be compared among mouse, swine, and monkey models. The average RBA for the four samples was similar for mouse and monkey (0.29 and 0.31, respectively) and somewhat higher for swine (0.44). The reason for these differences is unclear; they could result either from species differences in gastrointestinal processing and uptake of the soil dose or from the way the studies were conducted, such as the manner of soil dosing.
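The size of these interspecies differences can be made concrete with simple arithmetic on the RBA values quoted above. The snippet only restates those published values as fold differences; it does not reanalyze the underlying data, and the dictionary names are illustrative.

```python
# RBA values quoted in the text (Budinsky et al. 2008; Bradham et al. 2013).
dioxin_rba = {"rat": (0.37, 0.60), "swine": (0.20, 0.25)}  # two soils, vs corn oil
arsenic_mean_rba = {"mouse": 0.29, "monkey": 0.31, "swine": 0.44}  # four shared soils

# Pair each soil's rat value with its swine value.
for soil, (rat, swine) in enumerate(zip(*dioxin_rba.values()), start=1):
    print(f"dioxin soil {soil}: rat/swine = {rat / swine:.2f}")

for species in ("mouse", "monkey"):
    ratio = arsenic_mean_rba["swine"] / arsenic_mean_rba[species]
    print(f"arsenic, swine/{species} = {ratio:.2f}")
```

For the four soils tested in all three models, the swine average is roughly 1.4 to 1.5 times the mouse and monkey averages, while the rat estimates for dioxins and furans are roughly 1.9 to 2.4 times the swine estimates.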

In the absence of empirical data to determine which model/study design produces results that best predict oral bioavailability in humans, species can be chosen by assessing their comparative advantages and disadvantages. Table 5-1 summarizes the advantages and disadvantages of these various in vivo models.

Table 5‑1. Comparison of in vivo models

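| Species | Major Advantages | Major Disadvantages |
|---|---|---|
| Humans | No uncertainty from interspecies extrapolation; classical endpoints (blood, urine) can be used; individual estimates indicate population variability | Ethical constraints on dosing; low allowable doses complicate detection and discrimination from background; IRB approval, clinical monitoring, and recruitment add cost and time |
| Nonhuman primates | Phylogenetic similarity to humans; RBA estimates for individual subjects | High cost limits treatment group sizes; invasive endpoints such as tissue concentrations are relatively impractical |
| Swine | Close similarity to human GI physiology; lower cost than primates | Higher cost than rodents; typical design yields average RBA with confidence intervals but not intersubject variability |
| Laboratory rodents | Low cost, allowing large treatment groups and lower overall study cost | GI physiology differs from that of humans in some respects; typical design yields average RBA only |
| Other species (rabbits, hamsters, goats) | | GI physiology dissimilar to humans; limited use in the field may limit acceptance |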

5.1.2.3 Endpoints

Blood.

RBAs are calculated from AUC data using the following formula:

![]()

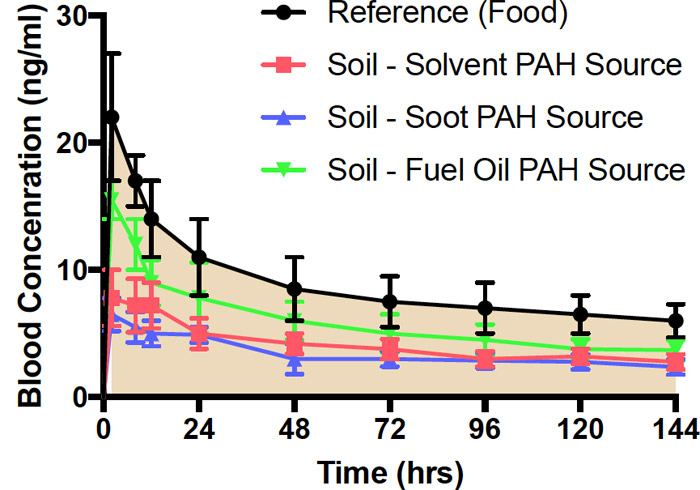

where the comparison dose is given in food, water, an oral dose vehicle, or another medium as appropriate (see Relevant Comparison Group). If the soil dose and the comparison dose are equal, the dose terms cancel and the RBA is simply the ratio of the AUC for soil to the AUC for the comparison group. If the doses are not equal, the AUCs can be dose-corrected as in the formula above, but only if the doses are in the linear pharmacokinetic range. As an example, Figure 5-1 compares blood concentration versus time profiles for benzo(a)pyrene (BaP) given at the same dose in food and in soils containing BaP from three different sources (solvent, soot, and fuel oil). These blood concentration curves, and the AUCs derived from them, show reduced bioavailability of BaP from soil regardless of the BaP source.

Figure 5-1. Blood concentrations over time following oral administration of benzo(a)pyrene (BaP) in food or weathered soil in rats.

Source: Figure derived from data published in Roberts et al. (2016).

The black reference line is the blood concentration over time of BaP and its metabolites following a single dose of BaP in food (the oral dose vehicle). Blue, red, and green profiles are for the same dose of BaP from weathered soils with solvent, soot, or fuel oil as the BaP source. The BaP concentration in the food and soil samples was 10 ppm. Results are expressed as mean ± standard error of the mean (SEM), N=5 (Roberts et al. 2016).
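The AUC-based RBA calculation can be sketched in a few lines. This is a minimal illustration assuming the linear trapezoidal rule for AUC (a common choice, not one specified here), AUC truncated at the last sampling time, and linear pharmacokinetics so that the dose correction is valid; the function names and concentration data are hypothetical.

```python
def auc_trapezoid(times: list[float], conc: list[float]) -> float:
    """AUC from time 0 to the last sample by the linear trapezoidal rule."""
    if len(times) != len(conc) or len(times) < 2:
        raise ValueError("need matching time and concentration lists (>= 2 points)")
    return sum(
        (t1 - t0) * (c0 + c1) / 2.0
        for t0, t1, c0, c1 in zip(times, times[1:], conc, conc[1:])
    )


def relative_bioavailability(auc_soil, dose_soil, auc_comp, dose_comp):
    """RBA = (AUC_soil / AUC_comp) x (Dose_comp / Dose_soil); linear PK assumed."""
    return (auc_soil / auc_comp) * (dose_comp / dose_soil)


# Hypothetical sampling times (h) and mean blood concentrations (ng/mL).
times = [0.0, 1.0, 2.0, 4.0]
soil = [0.0, 2.0, 2.0, 0.0]
food = [0.0, 4.0, 4.0, 0.0]

auc_soil = auc_trapezoid(times, soil)   # 5.0
auc_food = auc_trapezoid(times, food)   # 10.0
print(relative_bioavailability(auc_soil, 10.0, auc_food, 10.0))  # equal doses: 0.5
print(relative_bioavailability(auc_soil, 20.0, auc_food, 10.0))  # soil dose doubled: 0.25
```

In practice, sampling must continue long enough that the truncated AUC captures nearly all of the absorbed dose for both groups; otherwise the ratio reflects absorption rate as well as extent.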

Occasionally, attempts are made to estimate bioavailability from a blood concentration measured at a single time point. This approach assumes that the shape of the blood concentration versus time profile is the same regardless of the dosing medium, and that the ratio of blood concentrations at a given time point for the soil and comparison doses accurately reflects the ratio of their respective AUCs. Often, however, the shape of the profile changes when a chemical is given orally in different media, even when the total dose systemically absorbed over time is the same. For example, the rate of absorption might vary among dosing media, so that the peak blood concentration occurs at different times. The ratios of blood concentrations at most individual time points may then bear little or no relationship to the ratio of the AUCs. Consequently, this approach is not recommended unless the pharmacokinetics of the chemical from the relevant oral dosing media are the same as those from soil, the pharmacokinetics are well characterized in the animal model (which is seldom the case), or the chemical has been dosed repeatedly and blood concentrations are at steady state.

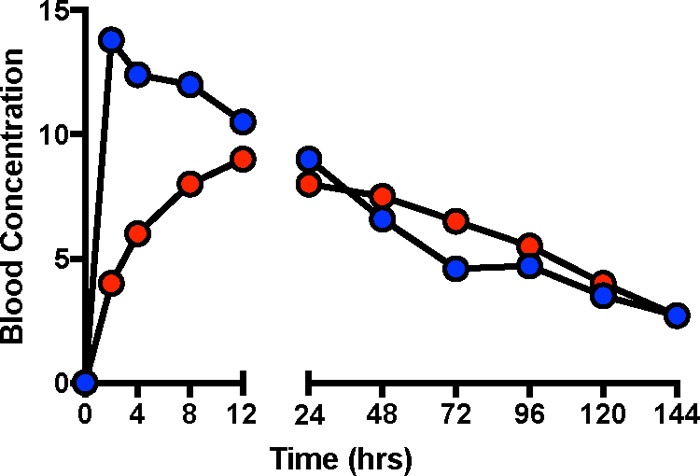

Figure 5-2 shows hypothetical blood concentration versus time profiles for the same chemical from two different environmental matrices. The blue curve shows rapid absorption from one matrix, while the red curve shows slower release and absorption from the other. The slower release and absorption result in lower concentrations at earlier time points but somewhat higher concentrations at later time points. The extent of absorption (the bioavailability) is the same from both matrices, and both profiles have the same AUC. If bioavailability had been determined by measuring blood concentrations at a single time point, then a sample taken less than 12 hours after the dose could have led to the erroneous conclusion that bioavailability was substantially greater for the blue-line conditions. Similarly, a sample taken after 24 hours might have led to the erroneous conclusion that bioavailability was somewhat greater for the red-line conditions.

Figure 5‑2. Different blood concentration profiles despite the same bioavailability.

Figure 5-2 shows hypothetical blood concentration curves for the same chemical ingested in two different matrices (such as food and soil), with initial absorption more rapid from one of them. Although the blood concentration curves differ over time, the absorbed dose is the same: the curves have the same AUC. This example illustrates the problem of comparing absorbed doses under two sets of conditions using blood concentrations at a single time point.
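The behavior illustrated in Figure 5-2 can be reproduced with a standard one-compartment model with first-order absorption and elimination (the Bateman function). The sketch assumes hypothetical rate constants: elimination `KE` = 0.1 per hour for both matrices, and absorption `KA` = 1.0 per hour (fast matrix) or 0.2 per hour (slow matrix), with complete absorption in both. Because AUC to infinity equals F × Dose / (V × KE), it does not depend on the absorption rate, yet single-time-point ratios point in opposite directions early and late. The sampling times used here are illustrative and do not correspond to those in the figure.

```python
import math

KE = 0.1                     # elimination rate constant, 1/h (hypothetical)
KA_FAST, KA_SLOW = 1.0, 0.2  # absorption rate constants, 1/h (hypothetical)
F, DOSE, V = 1.0, 1.0, 1.0   # fraction absorbed, dose, volume (arbitrary units)


def conc(t: float, ka: float) -> float:
    """One-compartment oral model (Bateman function); requires ka != KE."""
    return F * DOSE * ka / (V * (ka - KE)) * (math.exp(-KE * t) - math.exp(-ka * t))


def auc(ka: float, t_end: float = 200.0, step: float = 0.01) -> float:
    """Numerical AUC by the trapezoidal rule, to a time long after absorption ends."""
    n = int(round(t_end / step))
    c = [conc(i * step, ka) for i in range(n + 1)]
    return step * (sum(c) - (c[0] + c[-1]) / 2.0)


auc_fast, auc_slow = auc(KA_FAST), auc(KA_SLOW)
print(f"AUC fast = {auc_fast:.3f}, AUC slow = {auc_slow:.3f}, analytic = {F * DOSE / (V * KE):.3f}")

for t in (2.0, 48.0):
    ratio = conc(t, KA_FAST) / conc(t, KA_SLOW)
    print(f"t = {t:>4} h: fast/slow concentration ratio = {ratio:.2f}")
```

Both AUCs come out at about 10, yet a sample at 2 hours would suggest the fast matrix delivers roughly 2.6 times more chemical, and a sample at 48 hours would suggest it delivers only about half as much.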

Excreta (urine and feces).

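$$\mathrm{RBA} = \frac{U_{\mathrm{soil}}}{U_{\mathrm{comparison}}} \times \frac{\mathrm{Dose}_{\mathrm{comparison}}}{\mathrm{Dose}_{\mathrm{soil}}}$$

where U is the total mass excreted in urine.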

As with blood data, urinary excretion can be corrected if different doses are used in the soil and comparison groups, but only if the pharmacokinetics are assumed or shown to be linear.

Excretion of chemical in the feces can also be used to estimate the systemically absorbed dose if the mass excreted in feces can be assumed to represent unabsorbed dose from the gastrointestinal tract. Biliary excretion confounds this approach by reintroducing absorbed chemical, usually in the form of metabolites, into the gut where it can be eliminated in feces along with unabsorbed material. Microbial metabolism of the chemical within the GI tract can also be a confounding influence, complicating quantification of what exactly represents absorbed versus unabsorbed chemical. This approach works best when biliary excretion of the chemical is negligible and microbial metabolism is limited.
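The fecal approach and its main confounder can be expressed as a short mass-balance calculation. The sketch assumes that everything recovered in feces is counted as unabsorbed, so any absorbed chemical returned to the gut by biliary excretion inflates the fecal mass and biases the estimated absorbed fraction low. The function name and all masses are hypothetical.

```python
def absorbed_fraction_from_feces(dose: float, fecal_mass: float) -> float:
    """Apparent absorbed fraction, treating all fecal chemical as unabsorbed."""
    return (dose - fecal_mass) / dose


dose = 100.0           # ug administered (hypothetical)
unabsorbed = 40.0      # ug that never left the GI tract
biliary_return = 10.0  # ug absorbed, then excreted back into the gut via bile

true_fraction = (dose - unabsorbed) / dose  # 0.6
apparent = absorbed_fraction_from_feces(dose, unabsorbed + biliary_return)  # 0.5
print(f"true absorbed fraction {true_fraction:.2f}, apparent {apparent:.2f}")
```

If biliary excretion differed between the soil and comparison groups, the resulting bias would not cancel in the RBA ratio, which is one reason the approach works best when biliary excretion is negligible.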

Experiments in which excreta are used as endpoints are conducted in metabolism cages to facilitate the separation and collection of urine and feces. Collection surfaces within the cages are usually plastic, glass, or Teflon. The choice of material can be an important consideration, because some chemicals or metabolites adhere to certain surfaces (for example, PAH metabolites to plastic), resulting in incomplete recovery. Cage designs also vary in how efficiently they separate feces and urine. No design is perfect, and some degree of cross-contamination is expected. Cross-contamination is a problem when the excreta not used as the measurement endpoint contain enough of the chemical or its metabolites to significantly contaminate the endpoint sample. An example of this effect is presented in the PAHs chapter, where the small mass of metabolites present in urine may be significantly affected by even small contributions from fecal contamination.
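A rough calculation shows why small fecal carryover matters when urine is the endpoint. The sketch assumes, hypothetically, that a fixed fraction of the fecal chemical mass ends up in the urine collection; the masses and the 1% carryover are illustrative values, not measurements, and `urine_bias` is a hypothetical helper.

```python
def urine_bias(true_urine: float, fecal_mass: float, carryover: float) -> float:
    """Relative error in urinary mass when a fraction of fecal mass contaminates urine."""
    measured = true_urine + carryover * fecal_mass
    return (measured - true_urine) / true_urine


# PAH-like case (hypothetical): little in urine, much more in feces.
print(f"{urine_bias(true_urine=1.0, fecal_mass=100.0, carryover=0.01):.0%}")  # 100%
# The same carryover barely matters when urine carries most of the mass.
print(f"{urine_bias(true_urine=50.0, fecal_mass=5.0, carryover=0.01):.1%}")   # 0.1%
```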

Tissues.

Other endpoints.

5.1.2.4 Components and Documentation of In Vivo Methods

Documentation of an in vivo bioavailability study should include the following:

- a detailed description of how the test was conducted, including the species, sex, age, and number of animals

- the basic experimental design

- the number of soils tested, their sampling locations, the concentrations of the chemicals being evaluated, the size of the soil dose, and the manner of administration

- the nature of the comparison dose and how it was prepared (for example, in food, water, or some other vehicle), the rationale for why it is the most relevant basis for comparison, and the concentrations/doses tested

- the biological fluids/tissues used as endpoints and the method of collection

- the method of bioavailability calculation and a description of the statistical treatment of the data

Analytical laboratory reports for measurement of chemicals and metabolites in soil and biological tissues should be included and supply the usual QA/QC information on accuracy and reproducibility, limits of detection and quantitation, and results from appropriate blanks.